Introduction

DeepFake AI Statistics: DeepFake technology, powered by the latest generative AI, has become a present-day and rapidly escalating crisis for global security, finance, and personal trust.

This technology uses deep learning algorithms to create hyper-realistic, convincing imitations in video, audio, and image content, making people appear to say or do things they never did. In this in-depth look at DeepFake AI statistics, I’d like to walk through its impact from end to end.

The ease of creating high-quality synthetic media has put a tool for fraud and misinformation directly into the hands of bad actors. Understanding the DeepFake AI data is therefore the first and most crucial step you can take toward defending against it today.

Our analysis reveals an escalating arms race in which the volume and sophistication of synthetic content are outpacing our collective ability to detect it, driving billions of dollars in fraud losses and eroding digital trust. This report presents the latest figures on its growing impact on markets, individuals, and corporate security as we head into 2026. Let’s get started.

Editor’s Choice

- The volume of deepfake files shared online is projected to increase by 900% annually, escalating the digital trust crisis to an unprecedented scale.

- Deepfake-related fraud attempts surged by a dramatic 3,000% in 2023, showing how rapidly the technology is being weaponized for financial crime.

- A single corporate deepfake scam cost a major engineering firm USD 25 million in 2024, proving how effective the technology is for impersonation fraud.

- Automated detection systems suffer a disastrous 45 to 50% drop in accuracy when moving from controlled lab conditions to real-world deepfakes.

- Financial losses in the U.S. driven by generative AI fraud are expected to reach USD 40 billion by 2027, growing at a compound annual growth rate (CAGR) of 32%.

- An estimated 96% of deepfake pornographic content found online is non-consensual intimate imagery, overwhelmingly targeting women and underscoring a severe ethical failure.

- The global Deepfake AI market is forecast to reach USD 19.8 billion by 2033, illustrating the enormous economic value in both creating and detecting synthetic media.

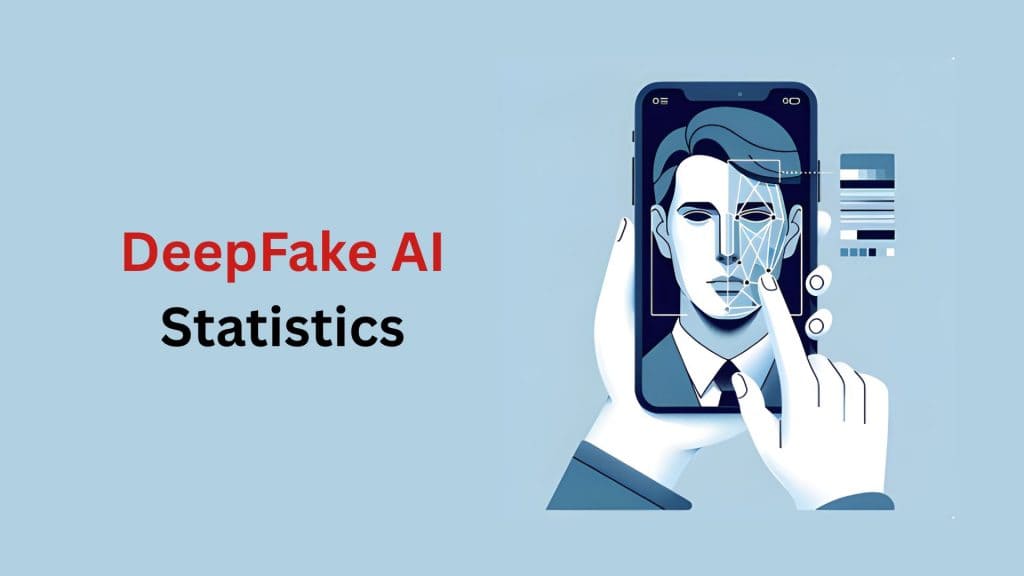

Deepfake Content Proliferation

(Reference: researchgate.net)

- The number of deepfake files circulating on the internet is projected to hit 8 million in 2025, a massive increase from approximately 500,000 in 2023.

- The detection of malicious deepfake incidents saw a tenfold (10x) increase globally between 2022 and 2023, signaling a critical shift from hypothetical risk to daily reality.

- The total volume of deepfake videos surged by an alarming 550% between 2019 and 2024, demonstrating the relentless pace of creation.

- The first quarter of 2025 alone saw 179 reported deepfake incidents, 19% more than the total recorded in all of 2024.

- The number of online deepfake videos has been observed to roughly double every six months, highlighting the geometric nature of this content growth.

- Deepfakes now account for 6.5% of all fraud attacks, an astonishing 2,137% increase since 2022, confirming their rapid adoption by organized crime.

- Over 500,000 video and audio deepfakes, many targeting political narratives, were reportedly shared across social media platforms in 2024.

- The face swap category, a common deepfake technique, experienced a massive 704% increase from the first to the latter half of 2023.
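
The two growth figures above (about 500,000 files in 2023 rising to a projected 8 million in 2025, and a doubling roughly every six months) can be cross-checked with simple compounding arithmetic. The Python sketch below is a minimal illustration that uses only those two file counts and assumes steady exponential growth between them; the helper names are hypothetical and chosen for readability.

```python
import math

def growth_factor(start: float, end: float) -> float:
    """Overall multiplicative growth between two observations."""
    return end / start

def annual_growth_rate(start: float, end: float, years: float) -> float:
    """Compound annual growth rate; 3.0 means +300% per year."""
    return (end / start) ** (1 / years) - 1

def doubling_time_months(start: float, end: float, years: float) -> float:
    """Months per doubling, assuming steady exponential growth."""
    doublings = math.log2(end / start)
    return years * 12 / doublings

# File counts quoted in this section (2023 estimate, 2025 projection).
files_2023 = 500_000
files_2025 = 8_000_000
span_years = 2

print(f"Growth factor: {growth_factor(files_2023, files_2025):.0f}x")
print(f"Implied annual growth: {annual_growth_rate(files_2023, files_2025, span_years):.0%}")
print(f"Implied doubling time: {doubling_time_months(files_2023, files_2025, span_years):.1f} months")
```

Under these assumptions, a 16x rise over two years works out to roughly 4x (about +300%) per year, or one doubling every six months, which matches the six-month doubling cited above. A 900% annual rate would mean 10x per year, or about 100x over the same two years, so that headline figure most likely comes from a different source or measurement window.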

| Metric | Detail Data | Implication |
| --- | --- | --- |
| Projected Files 2025 | 8 Million | Near-total saturation of the digital content space. |
| Annual Growth Rate | 900% | Exponential acceleration, challenging mitigation efforts. |
| Incident Surge 2022-2023 | 10x Increase | Critical transition from novelty to mainstream threat. |
| Fraud Attempt Spike 2023 | 3,000% Increase | Deepfakes are the preferred tool for sophisticated fraud. |
| Video Volume Growth 2019-2024 | 550% Increase | Focus is shifting heavily toward video and audio synthesis. |

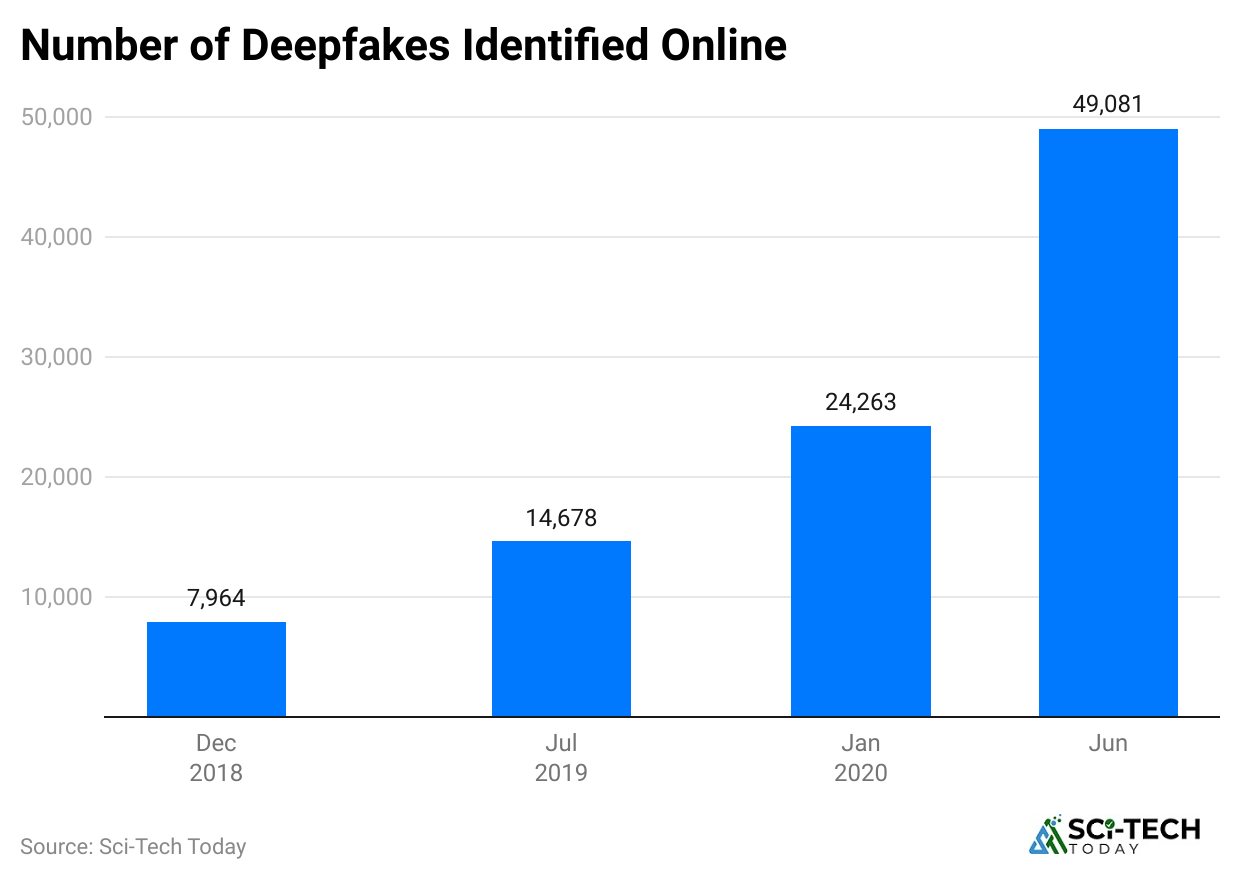

Deepfake Financial Fraud Statistics

(Reference: regulaforensics.com)

- Businesses lost an average of nearly USD 500,000 per deepfake-related incident in 2024, with larger enterprises suffering losses up to USD 680,000.

- Financial losses in North America alone exceeded USD 200 million in the first quarter of 2025 due to deepfake-enabled fraud, showing the extreme cost of this new attack vector.

- Generative AI-facilitated fraud losses in the U.S. are projected to climb from an estimated USD 12.3 billion in 2023 to USD 40 billion by 2027, a CAGR of 32%.

- An alarming 92% of companies have already experienced some form of financial loss due to a deepfake incident.

- Fintech businesses, despite their technological focus, reported significantly higher average losses of over USD 630,000 per deepfake incident, outpacing traditional industries.

- The Crypto sector remains the single largest target, accounting for a massive 88% of all deepfake fraud cases detected, due to the high-value, irreversible nature of its transactions.

- Scammers need as little as three seconds of audio of a target’s voice to create a convincing, high-quality voice clone for use in immediate, high-pressure phone scams.

- A significant 77% of individuals who were successfully tricked by a deepfake scam ended up losing money, with one-third of these victims losing over USD 1,000.
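
The projected jump from USD 12.3 billion to USD 40 billion can be sanity-checked with the standard CAGR formula, CAGR = (end / start)^(1 / years) - 1. The minimal Python sketch below uses only those two dollar figures; because the implied rate is sensitive to how many compounding years separate them, it evaluates both a three-year and a four-year span and then runs the 32% rate forward from the USD 12.3 billion base. The function names are illustrative, not from any particular library.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1

def project(start: float, rate: float, years: int) -> float:
    """Value after compounding `start` at `rate` for `years` years."""
    return start * (1 + rate) ** years

start_bn, end_bn = 12.3, 40.0  # U.S. GenAI fraud losses, USD billions

for years in (3, 4):
    print(f"{years}-year span: implied CAGR {cagr(start_bn, end_bn, years):.1%}")

# Reverse check: where does a 32% CAGR land from the USD 12.3 billion base?
for years in (3, 4):
    print(f"32% for {years} years: USD {project(start_bn, 0.32, years):.1f} billion")
```

A three-year span implies roughly 48% a year, while a four-year span implies about 34%; compounding 32% for four years gives about USD 37 billion versus about USD 28 billion for three. The quoted 32% therefore fits a four-year horizon (2023 to 2027) far better than a three-year one.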

| Financial Metric | Details | Implication |
| --- | --- | --- |
| Average Loss per Incident | Nearly USD 500,000 | The high-stakes, high-return nature of deepfake fraud. |
| Projected U.S. Loss 2027 | USD 40 Billion | Catastrophic systemic failure without robust defense. |
| Companies Experiencing Loss | 92% | Threat is ubiquitous, not limited to specific sectors. |
| Crypto Sector Share | 88% | Urgent need for enhanced verification in high-risk transactions. |
| Synthetic Voice Fraud (Insurance) | 475% Increase in 2024 | Insurance and other heavily regulated sectors are now major targets. |

Human vs. AI Accuracy

(Reference: stanford.edu)

- A meta-analysis of over 50 papers found that overall human deepfake detection accuracy is only 55.54%, barely above the 50% expected from random guessing.

- Advanced, multimodal AI systems are currently achieving impressive 94 to 96% accuracy rates when operating under optimal, controlled conditions.

- State-of-the-art automated detection systems experience a catastrophic 45 to 50% accuracy drop when they encounter deepfakes in real-world environments rather than laboratory conditions.

- Human accuracy for detecting deepfake images is particularly low, hovering at just 53.16%, which shows how convincing synthetic photos have become.

- The global market for deepfake detection technology is predicted to grow by 42% annually to reach USD 15.7 billion by 2026.

- A recent study revealed that a minuscule 0.1% of consumers could correctly identify every real and deepfake image and video in a test.

- Humans can only correctly detect voice cloning or speech deepfakes approximately 73% of the time, leaving a significant gap for sophisticated voice-based social engineering scams.

- Leading, dedicated AI detection models achieve a reported detection accuracy rate of around 84%.

| Detection Metric | Detail Data | Implication |
| --- | --- | --- |
| Overall Human Accuracy | 55.54% | Humans are fundamentally unreliable as a primary defense layer. |
| AI Real-World Accuracy Drop | 45 to 50% Fall | Deepfake creators are successfully optimizing against current models. |
| Detection Market CAGR | 42% to 2026 | Enormous, urgent investment is being channeled into defense technology. |
| Human Perfect Detection Rate | 0.1% | The threat is practically invisible to the average consumer. |
| Human Audio Detection Accuracy | 73% | Voice cloning presents a high-risk vector for direct impersonation. |

Deepfakes and Exploitation

(Source: iproov.com)

- 96% of deepfake pornographic content circulating online is non-consensual intimate imagery (NCII), a direct form of digital gender-based violence.

- Approximately 99% of the individuals featured in pornographic deepfakes are women, cementing the gendered nature of this exploitation.

- A one-minute deepfake pornographic video can be produced in under 25 minutes at no cost, using as little as a single clear image of the target.

- The vast majority of deepfakes found online (98%) are not political or entertainment-related but are dedicated to pornographic content, contrasting sharply with public perception.

- Nearly all (94%) of the individuals featured in deepfake NCII are drawn from the entertainment industry, including celebrities and influencers, whose public images provide easily accessible source material.

- Approximately one-third (33%) of readily available deepfake creation tools and platforms allow or facilitate the generation of pornographic content.

- The price for a good-quality, customized deepfake video on the dark web can range from USD 300 to USD 20,000 per minute.

| NCII Metric | Details | Implication |
| --- | --- | --- |
| NCII Content Share | 96% | The primary and most pervasive misuse of the technology. |
| Victim Gender Share | 99% Women | A tool weaponized almost exclusively for digital misogyny. |
| Creation Time | Less than 25 Minutes | Ease and speed enable mass production and distribution. |
| Non-Pornographic Share | 2% | The focus on political and financial deepfakes distracts from the main crisis. |

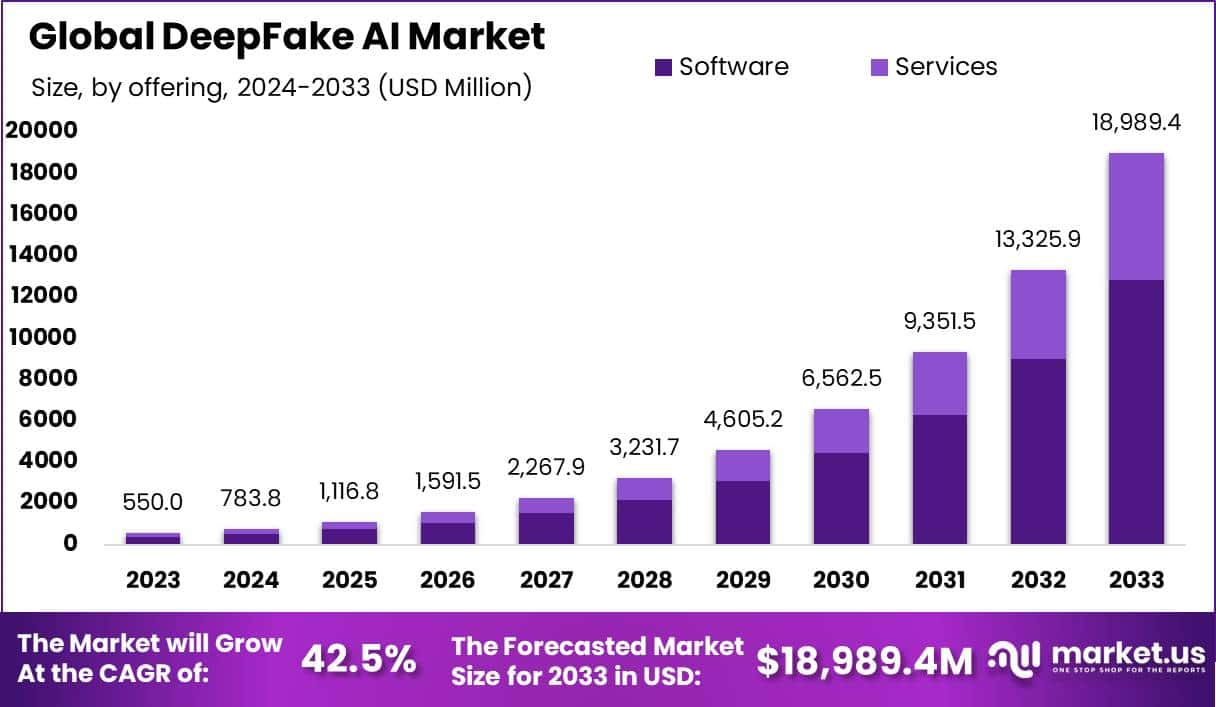

DeepFake AI and Detection Market Sizes

(Source: market.us)

According to Market.us, deepfake technology has created a massive two-sided market: one for the technology itself and an urgent security market to counter it. These are two of the fastest-growing sectors in the AI economy.

DeepFake AI Market Growth

- USD 19.8 Billion Projection: The global Deepfake AI market is forecast to surge from USD 764.8 million in 2024 to USD 19,824.7 million by 2033, representing a spectacular CAGR of 44.3%.

- BFSI Fastest-Growing: The Banking, Financial Services, and Insurance (BFSI) vertical is expected to be the fastest-growing segment, projected at a CAGR of 48.4% from 2025 to 2033.

- Image Dominance: The Image Deepfake segment led the market in 2024, securing the largest revenue share at 53.0%.

- Software Leadership: The Software segment captured the largest revenue share in 2024 at 64.4%.

- GANs Technology Leader: Generative Adversarial Networks (GANs), the core technology for producing hyper-realistic fakes, accounted for the largest technology market share in 2024.

DeepFake Detection Market Growth

- USD 5.6 Billion Detection Market: The deepfake detection market is expected to grow from USD 168.7 million in 2025 to USD 5,609.3 million by 2034, with a CAGR of 47.6%.

- North America Dominance: North America held the largest market share in the deepfake detection space in 2024, capturing over 42.6% of the total market.

- Video & Image Segment: The Video and Image Deepfake Detection segment dominated the market in 2024, taking more than 66.7% of the share.

- Cloud-Based Solutions: The Cloud-based segment led the deepfake detection market in 2024, holding over 61.8% of the share, due to the need for scalable and flexible detection tools without massive infrastructure costs.

- Media & Entertainment: The Media and Entertainment segment dominated the end-user market in 2024, securing over 49.2% of the detection market, as content creators strive to protect intellectual property and authenticity.

| Metric | DeepFake AI Creation | DeepFake Detection (Security) |
| --- | --- | --- |
| CAGR (to 2033 / 2034) | 44.3% | 47.6% |
| Dominant Segment Type, 2024 | Image Deepfake, 53.0% | Video & Image Detection, 66.7% |
| Fastest-Growing Vertical | BFSI, 48.4% CAGR | BFSI (high fraud focus) |
| Regional Leader, 2024 | North America, 34.8% Share | North America, 42.6% Share |
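
The two Market.us forecasts can be checked with the same compounding arithmetic. The Python sketch below recomputes each implied CAGR from the start and end values quoted in this section, assuming a nine-year compounding span for both (2024 to 2033 for the Deepfake AI market, 2025 to 2034 for detection). Research firms sometimes compute a headline CAGR over a slightly different window, so small gaps between the implied and quoted rates are expected; the `cagr` helper is illustrative.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1

# (label, start USD millions, end USD millions, years, quoted CAGR)
forecasts = [
    ("Deepfake AI, 2024-2033", 764.8, 19_824.7, 9, 0.443),
    ("Deepfake detection, 2025-2034", 168.7, 5_609.3, 9, 0.476),
]

for label, start, end, years, quoted in forecasts:
    implied = cagr(start, end, years)
    print(f"{label}: implied {implied:.1%} vs quoted {quoted:.1%}")
```

The detection forecast reproduces its quoted 47.6% almost exactly, while the Deepfake AI forecast comes out near 43.6%, slightly below the quoted 44.3%, which suggests that figure was calculated over a somewhat different period.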

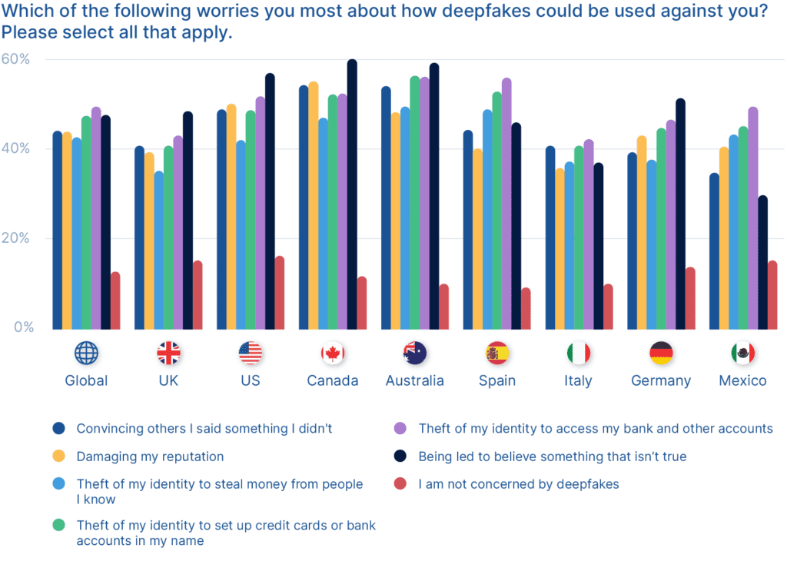

Trust, Fear, and Preparedness

(Reference: researchgate.net)

- A substantial 72% of consumers reported worrying on a day-to-day basis about being tricked by a deepfake into handing over sensitive information or money.

- Despite the alarming data on low human accuracy, 60% of consumers still believe they could successfully spot a deepfake.

- Over half of businesses (53%) reported being successfully targeted by deepfake scams.

- A massive 85% of corporate finance professionals now classify deepfake scams as an existential threat to their organization’s financial security.

- Despite the surging fraud figures, a concerning 37% of business executives believe deepfakes pose no risk to their companies.

- Nearly one-third (32%) of business leaders lack confidence in their own staff’s ability to recognize and properly respond to deepfake fraud attempts targeting their organization.

- Approximately one-third (33%) of individual victims who lost money to a deepfake scam reported losing more than USD 1,000.

- Due to the prevalence of deepfakes, 49% of consumers reported having less trust in social media platforms and the content shared there.

| Perception Metric | Detail Statistic | Implication |
| --- | --- | --- |
| Consumer Daily Concern | 72% | Widespread erosion of digital confidence and trust. |
| Executive Confidence Gap | 37% Believe No Risk | Critical operational and security blind spots in leadership. |
| Consumer Overconfidence | 60% Believe They Can Detect | The public is largely unprotected due to self-deception. |
| Staff Detection Confidence | 32% Lack Confidence | Corporate defense depends on untrained personnel. |
| Social Media Trust | 49% Report Less Trust | Societal trust in information is fundamentally undermined. |

Conclusion

Overall, these DeepFake AI statistics outline an urgent need for systemic defense. The data is clear: the creation of synthetic media is exponential, the financial losses are catastrophic, and our collective human and automated detection abilities are lagging significantly.

The battle against deepfakes is a fight for the integrity of our information, the security of our finances, and the foundational trust in our digital interactions. Use these statistics as a valuable resource: they are the data points that can help you strengthen security, justify investment in advanced detection, and implement human-centric verification protocols that are far harder for an AI clone to breach.

By understanding the scale and velocity of this challenge, you are already one step ahead in protecting your business and your digital identity. Thank you for diving into this critical data with us.

I hope you enjoyed this article. If you have any questions, let us know and we will try to answer them as soon as possible. I would also welcome your suggestions for improving it. Thanks for reading to the end.